1. Background

The EU AI Act (Regulation (EU) 2024/1689) is the world's first comprehensive legal framework for artificial intelligence. It takes a risk-based, tiered approach to promote the development of trustworthy AI while safeguarding health, safety and fundamental rights.

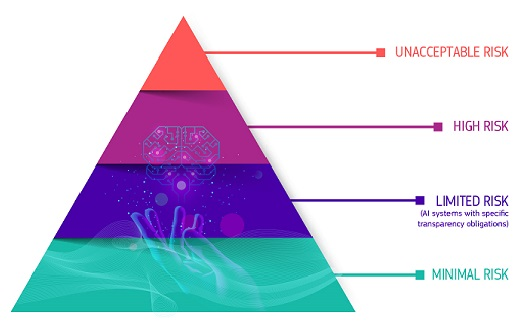

2. Risk classification

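- Unacceptable risk: Prohibited practices, such as social scoring and, subject to narrow exceptions, real-time remote biometric identification (e.g., facial recognition) in publicly accessible spaces for law enforcement purposes.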

- High risk: Systems used in areas such as healthcare, education, critical infrastructure and law enforcement. They must meet requirements including risk management, transparency, human oversight, and accuracy, robustness and cybersecurity.

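- Limited risk: Transparency obligations apply. People must be clearly informed when they are interacting with an AI system (e.g., a chatbot) or viewing AI-generated content.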

- Minimal risk: Applications such as spam filters, which face no additional obligations.

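- General-purpose AI (GPAI) models are subject to additional transparency and documentation requirements, and the most capable models, those deemed to pose systemic risk (presumed, for example, when training compute exceeds 10^25 FLOPs), face stricter obligations such as model evaluation and incident reporting.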

3. Regulatory mechanism

The EU AI Office oversees the overall implementation of the regulation and supervises general-purpose AI models, while national market surveillance authorities in each member state carry out compliance checks. They are supported by the European Artificial Intelligence Board, a scientific panel of independent experts, and an advisory forum.

4. Supporting measures

The GPAI Code of Practice has been published as a voluntary tool that guides companies in setting up self-regulatory measures on transparency, copyright, and safety and security, helping model providers prepare for and demonstrate compliance with their obligations.

See the official website for details:

https://digital-strategy.ec.europa.eu/en/policies/regulatory-framework-ai